What is Conduit?

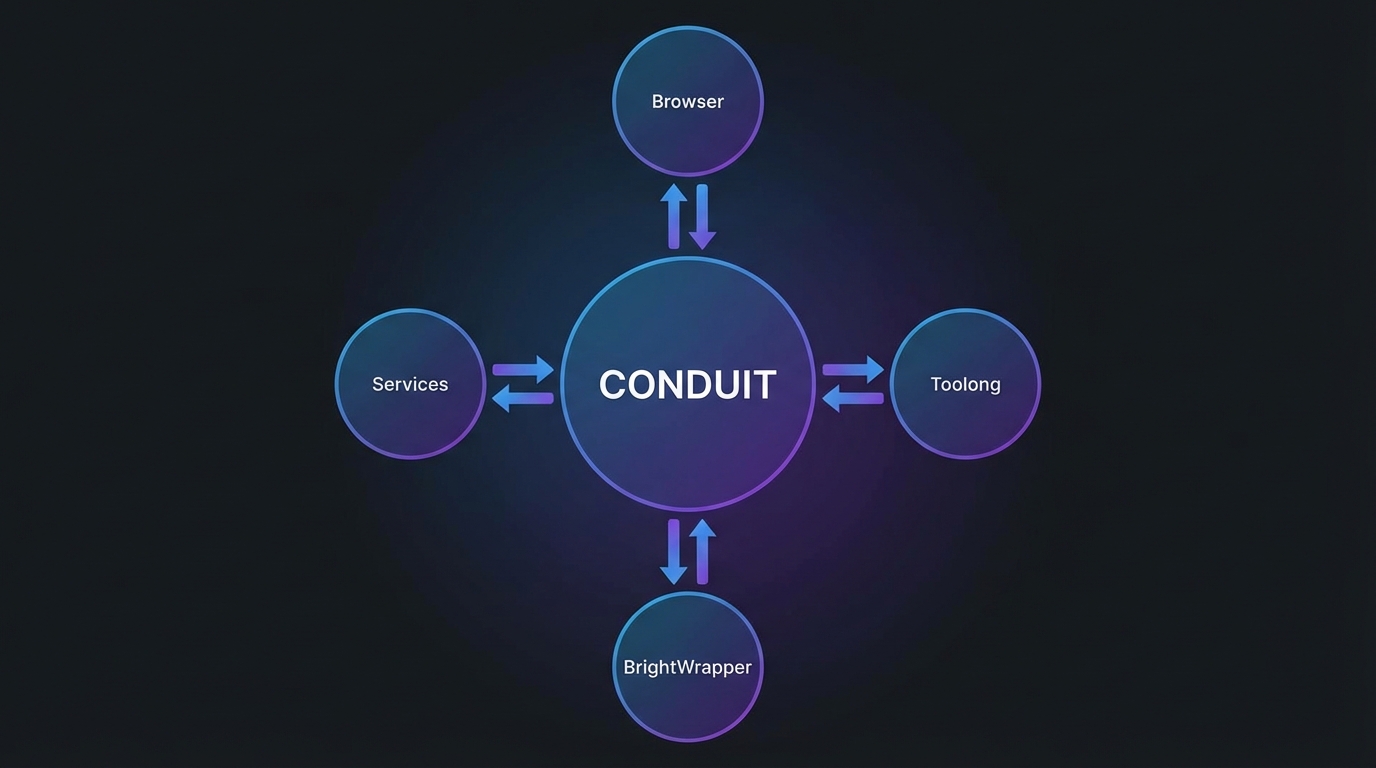

Conduit is a WebSocket-based message routing hub that acts as the central nervous system for your infrastructure. Instead of services talking directly to each other, all communication flows through Conduit.

Applications connect to Conduit once and can then communicate with any registered service. Conduit handles message routing, tracks request progress, and ensures real-time updates reach the right clients.
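The following minimal Python sketch shows one way a hub could "track request progress": it correlates each update with the client that made the request. It assumes every request gets a unique ID and moves through `pending`, `in_progress`, and `done` states. The `RequestTracker` and `TrackedRequest` names and the status values are hypothetical and are not Conduit's actual API.

```python
import uuid
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class TrackedRequest:
    """State a hub might keep for one in-flight request (hypothetical model)."""
    client_id: str  # the client that sent the request; progress goes back here
    service: str  # the service name the request was routed to
    status: str = "pending"  # pending -> in_progress -> done
    updates: List[str] = field(default_factory=list)


class RequestTracker:
    """Correlates request IDs with their originating client and status."""

    def __init__(self) -> None:
        self._requests: Dict[str, TrackedRequest] = {}

    def open(self, client_id: str, service: str) -> str:
        # Assign a unique ID so later progress messages can be matched up.
        request_id = uuid.uuid4().hex
        self._requests[request_id] = TrackedRequest(client_id, service)
        return request_id

    def record_progress(self, request_id: str, update: str) -> str:
        # Store the update and return the client it should be forwarded to.
        req = self._requests[request_id]
        req.status = "in_progress"
        req.updates.append(update)
        return req.client_id

    def complete(self, request_id: str) -> TrackedRequest:
        # Mark the request finished and stop tracking it.
        req = self._requests.pop(request_id)
        req.status = "done"
        return req

    def status(self, request_id: str) -> Optional[str]:
        # None means the ID is unknown or the request has already completed.
        req = self._requests.get(request_id)
        return req.status if req is not None else None
```

`record_progress` returns the originating client ID. This lets the hub forward each update to the right client without the service needing to know who asked.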

This decouples your frontend from your backends. For example, your browser doesn't need to know where an LLM service is hosted or how to handle reconnections; Conduit manages both.

Services register their capabilities when they connect. Clients send requests to service names, not URLs. Conduit routes each message to the service registered under that name and streams progress updates back to the requesting client.
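You can model the register, route-by-name, and stream-back flow in memory, without any networking. This Python sketch illustrates the flow under stated assumptions and is not Conduit's implementation. The `Hub` class, its `register`/`send`/`capabilities` methods, the `uppercase_service` example, and the convention that a service handler is a generator that yields progress updates are all hypothetical.

```python
from typing import Callable, Dict, Iterator, List

# Hypothetical handler shape: takes a request payload and yields progress
# updates, with the last update carrying the final result.
Handler = Callable[[dict], Iterator[dict]]


class Hub:
    """In-memory stand-in for a name-based routing hub (no networking)."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Handler] = {}
        self._capabilities: Dict[str, List[str]] = {}

    def register(self, name: str, capabilities: List[str], handler: Handler) -> None:
        # Called when a service connects: record its name and capabilities.
        self._handlers[name] = handler
        self._capabilities[name] = list(capabilities)

    def capabilities(self, name: str) -> List[str]:
        # Unknown services report no capabilities.
        return list(self._capabilities.get(name, []))

    def send(self, service: str, payload: dict,
             on_update: Callable[[dict], None]) -> None:
        # Clients address a service by name, never by URL.
        handler = self._handlers.get(service)
        if handler is None:
            raise LookupError(f"no service registered as {service!r}")
        # Forward each update to the client as soon as the service yields it.
        for update in handler(payload):
            on_update(update)


def uppercase_service(payload: dict) -> Iterator[dict]:
    # Example service: reports progress, then returns its result.
    yield {"status": "in_progress", "step": "received"}
    yield {"status": "done", "result": payload["text"].upper()}


if __name__ == "__main__":
    hub = Hub()
    hub.register("uppercase", ["text.upper"], uppercase_service)
    hub.send("uppercase", {"text": "hello"}, print)
```

Modeling handlers as generators keeps streaming simple: the hub forwards each update as soon as the service yields it, instead of waiting for a final result. A real WebSocket hub would write these updates to the client's socket rather than call a local function.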